State DOT officials have crafted a Supplemental Environmental Assessment that conceals more than it reveals

The Rose Quarter traffic report contains no data on “average daily traffic,” the most common measure of vehicle travel

Three and a half years later, ODOT’s Rose Quarter traffic modeling is still a closely guarded secret

The new SEA makes no changes to the regional traffic modeling used for the 2019 EA; that modeling was done in 2015, seven years ago

The report misleadingly cites “volume to capacity ratios” without revealing either volumes or capacities

ODOT has violated its own standards for documenting traffic projections, and violated national standards for maintaining integrity of traffic projections.

In theory, the National Environmental Policy Act is all about disclosing facts. But in practice, that isn’t always how it works out. The structure and content of the environmental review is in the hands of the agency proposing the project, in this case the proposed $500 million widening of the I-5 Rose Quarter freeway in Portland. The Oregon Department of Transportation and the Federal Highway Administration have already decided what they want to do: Now they’re writing a set of environmental documents that are designed to put it in the best possible light. And in doing so, they’re keeping the public in the dark about the most basic facts about the project. In the case of the I-5 project, they haven’t told us how many vehicles are going to use the new wider freeway they’re going to build.

A traffic report without ADT is like a financial report without $

To take just one prominent example, the project’s “Traffic Technical Report,” which purports to discuss how the project will affect the flow of vehicles on the freeway (which, after all, is the project’s purpose), conspicuously omits the most common and widely used metric of traffic volume: average daily traffic, or ADT.

How common is ADT? It’s basically the standard yardstick for describing traffic. ODOT uses it to decide how wide roads should be. It’s the denominator in the crash rates used to measure road safety. Average daily traffic is also, not incidentally, the single most important variable in calculating how much carbon and other air pollutants cars will emit when they drive on this section of road. ODOT maintains a complicated system of recording stations and estimation, tracking traffic for thousands of road segments on highways. ODOT’s annual report, Traffic Volume Trends, details average daily traffic for about 3,800 road segments statewide. It also turns out that predicted future ADT is an essential input into the crash modeling software that ODOT used to predict crash rates on the freeway (“ADT” appears 141 times in the model’s user manual). ODOT uses ADT numbers throughout the agency: Google reports that the Oregon DOT website has about 1,300 documents with the term “ADT” and nearly 1,000 with the term “average daily traffic.” Chapter 5 of ODOT’s Analysis Procedures Manual, last updated in July 2018, contains 124 references to the term “ADT” in just 55 pages. “Average daily traffic” is as fundamental to describing traffic as degrees Fahrenheit is to a weather report.
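To see why ADT sits in the denominator of road safety calculations, consider the commonly used segment crash rate, expressed as crashes per million vehicle miles traveled. The sketch below is illustrative only: the function name and every number in it are hypothetical, not figures from ODOT or the Rose Quarter reports.

```python
def crash_rate_per_mvmt(crashes, adt, segment_miles, years=1):
    """Crashes per million vehicle miles traveled on a road segment.

    Exposure (vehicle miles traveled) is ADT x 365 days x years x segment length.
    """
    exposure = adt * 365 * years * segment_miles
    return crashes * 1_000_000 / exposure


# Hypothetical inputs: the same crash count on the same one-mile segment,
# evaluated under two different assumed ADT values.
crashes = 50
rate_low_adt = crash_rate_per_mvmt(crashes, adt=100_000, segment_miles=1.0)
rate_high_adt = crash_rate_per_mvmt(crashes, adt=125_000, segment_miles=1.0)

print(f"Rate at ADT 100,000: {rate_low_adt:.2f} crashes per million VMT")
print(f"Rate at ADT 125,000: {rate_high_adt:.2f} crashes per million VMT")
```

With an identical crash count, the computed rate differs by about a fifth depending on which ADT is assumed. A safety claim can’t be checked without knowing the ADT behind it.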

Three and a half years ago, we searched through the original “traffic analysis technical report” prepared for the Rose Quarter project. It had absolutely no references to ADT: The Rose Quarter I-5 Traffic Analysis Technical Report. We searched the PDF file of the report for the term “ADT”—this was the result:

ODOT just made public its “Traffic Analysis Supplemental Technical Report” for the Rose Quarter project’s Supplemental Environmental Assessment. We repeated our experiment and found . . . still no references to average daily traffic:

ADT is just the very prominent tip of a much larger missing-data iceberg. There’s much, much more that’s baked into the assumptions and models used to create the estimates in the report that simply isn’t visible or documented anywhere in the Environmental Assessment or its cryptic and incomplete appendices. The advocacy group No More Freeways has identified a series of documents and data series that are missing from the report and its appendices, and has filed a formal request to obtain this data. In March 2019, just prior to the expiration of the public comment period, ODOT provided fragmentary and incomplete data on some hourly travel volumes, but again, no average daily traffic data. The new traffic technical report does not contain this data or updates of it.

No New Regional Modeling

The traffic technical report makes it clear that ODOT has done nothing to update the earlier regional-scale traffic modeling it did for the project. ODOT claims it used Metro’s Regional Travel Demand Model to generate its traffic forecasts for the I-5 freeway, but it has never published that model’s assumptions or results. And in the three years since the original report was published, it has done nothing to revisit that modeling. The traffic technical report says:

. . . the travel demand models used in the development of future traffic volumes incorporated into the 2019 Traffic Analysis Technical Report are still valid to be used for this analysis.

In its comments on the 2019 EA, a group of technical experts pointed out a series of problems with that modeling. Because ODOT made no effort to update or correct its regional modeling, all of those same problems pervade the modeling in the new traffic technical report.

Hiding results

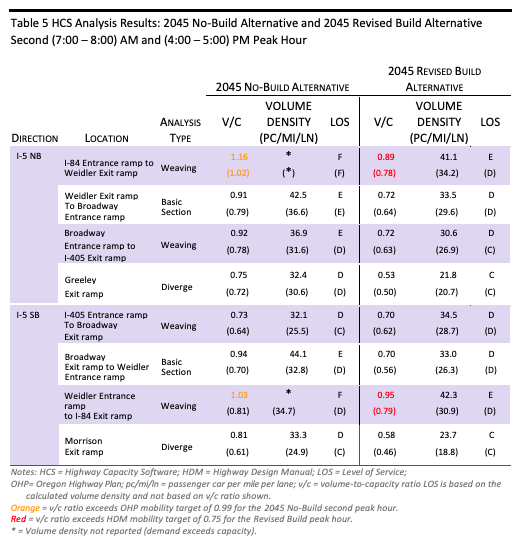

The supplemental traffic analysis presents its results in a way that appears designed to obscure, rather than reveal, facts. Here is a principal table comparing the No-Build and Build designs.

Notice that the table does not report actual traffic volumes, either daily (ADT) or hourly. Instead, it reports the “V/C” (volume to capacity) ratio. But because it reveals neither the volume nor the capacity, readers are left completely in the dark as to how many vehicles travel through the area in the two scenarios. This matters because widening the freeway increases roadway capacity: without both numbers, it’s impossible for an outside observer to discern how many more vehicles the project anticipates moving through the area. This, in turn, is essential to understanding the project’s traffic and environmental impacts. It seems likely that the model commits the common error of forecasting traffic volumes in excess of capacity (i.e., between I-84 and Weidler) in the No-Build. As documented by modeling expert Norm Marshall, predicted volumes in excess of capacity are symptomatic of modeling error.
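A little arithmetic shows why a V/C ratio by itself tells you nothing about how many vehicles are on the road. The sketch below uses made-up capacities and ratios (none of these numbers come from the ODOT report, which is precisely the problem) to show that a lower V/C ratio in the Build scenario is entirely consistent with more traffic, not less.

```python
def implied_volume(vc_ratio, capacity):
    """Hourly volume implied by a V/C ratio and a capacity (vehicles per hour)."""
    return vc_ratio * capacity


# Hypothetical capacities in vehicles per hour: the Build scenario adds capacity.
no_build_capacity = 4000
build_capacity = 6000

# Hypothetical V/C ratios of the kind a summary table might report.
no_build_vc = 1.0
build_vc = 0.8

no_build_volume = implied_volume(no_build_vc, no_build_capacity)
build_volume = implied_volume(build_vc, build_capacity)

print(f"No-Build: V/C {no_build_vc:.2f}, implied volume {no_build_volume:.0f} vehicles/hour")
print(f"Build:    V/C {build_vc:.2f}, implied volume {build_volume:.0f} vehicles/hour")
print(f"Change in volume: {build_volume - no_build_volume:+.0f} vehicles/hour")
```

In this hypothetical, the ratio “improves” from 1.0 to 0.8 while hourly traffic rises by about 800 vehicles. A reader shown only the ratios could not tell that case apart from one in which traffic fell.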

ODOT is violating its own standards and professional standards by failing to document these basic facts

The material provided in the traffic technical report is so cryptic, truncated and incomplete that it is impossible to observe key outputs or determine how they were produced. This is not merely sloppy work. This is a clear violation of professional practice in modeling. ODOT’s own Analysis Procedures Manual (which spells out how ODOT will analyze traffic data to plan for highway projects like the Rose Quarter) states that the details need to be fully displayed:

6.2.3 Documentation

It is critical that after every step in the DHV [design hour volume] process that all of the assumptions and factors are carefully documented, preferably on the graphical figures themselves. While the existing year volume development is relatively similar across types of studies, the future year volume development can go in a number of different directions with varying amounts of documentation needed. Growth factors, trip generation, land use changes are some of the items that need to be documented. If all is documented then anyone can easily review the work or pick up on it quickly without questioning what the assumptions were. The documentation figures will eventually end up in the final report or in the technical appendix.

The volume documentation should include:

• Figures/spreadsheets showing starting volumes (30 HV)
• Figures/spreadsheets showing growth factors, cumulative analysis factors, or travel demand model post-processing.
• Figures/spreadsheets showing unbalanced DHV
• Figure(s) showing balanced future year DHV. See Exhibit 6-1
• Notes on how future volumes were developed:
  o If historic trends were used, cite the source.
  o If the cumulative method was used, include a land use map, information that documents trip generation, distribution, assignment, in-process trips, and through movement (or background) growth.
  o If a travel demand model was used, post-processing methods should be specified, model scenario assumptions described, and the base and future year model runs should be attached

Documentation is also essential to personal integrity in forecasting. The American Association of State Highway and Transportation Officials publishes a manual to guide its member agencies (including ODOT) in the preparation of highway forecasts, and it gives specific direction on this point. The National Cooperative Highway Research Program report “Analytical Travel Forecasting Approaches for Project-Level Planning and Design” (NCHRP Report #765), which ODOT claims provides its methodology, states:

It is critical that the analyst maintain personal integrity. Integrity can be maintained by working closely with management and colleagues to provide a truthful forecast, including a frank discussion of the forecast’s limitations. Providing transparency in methods, computations, and results is essential. . . . The analyst should document the key assumptions that underlie a forecast and conduct validation tests, sensitivity tests, and scenario tests—making sure that the results of those tests are available to anyone who wants to know more about potential errors in the forecasts.

ODOT: A history of hiding facts from the public

This shouldn’t come as a surprise to anyone who has followed the Rose Quarter project. ODOT staff working on the project went to great lengths to conceal the actual physical width of the project they are proposing to build (it’s 160 feet wide, enough for a 10-lane freeway, something they still don’t reveal in the new EA, and which they don’t analyze in the traffic report). And an Oregon judge found that ODOT staff violated the state’s public records law by preparing a false copy of documents summarizing public comments on the Rose Quarter project. ODOT falsely claimed that a peer review group validated its claims that the project would reduce air pollution; the leader of that group said ODOT hadn’t provided any information about the travel modeling on which the pollution claims were based, and that the group couldn’t attest to their accuracy.

This kind of distortion about traffic modeling has a long history: the Oregon Department of Transportation has previously presented flawed estimates of carbon emissions from transportation projects. During the 2015 Oregon Legislature, the department produced estimates saying that a variety of “operational improvements” to state highways would result in big reductions in carbon. As with the Rose Quarter freeway widening, the putative gains were assumed to come from less stop-and-go traffic. Under questioning from environmental groups and legislators, the department admitted that its estimates of savings were overstated by a factor of at least three. ODOT’s misrepresentations so poisoned the debate that they killed legislative action on a pending transportation package. What this case demonstrates is that the Oregon Department of Transportation is an agency that allows its desire to get funds to build projects to bias its estimates. That demonstrated track record should give everyone pause before accepting the results of an Environmental Assessment that conceals key facts.

Not presenting this data as part of the Environmental Assessment prevents the public from knowing, questioning and challenging the validity of the Oregon Department of Transportation’s estimates. In effect, we’re being told to blindly accept the results generated from a black box, when we don’t know what data was put in the box, or how the box generates its computations.

This plainly violates the spirit of NEPA, and likely violates the letter of the law as well. Consider a recent court case challenging the environmental impact statement for a highway widening project in Wisconsin. In that case, a group of land use and environmental advocates sued the US Department of Transportation, alleging that the traffic projections (denominated, as you might have guessed, in ADT) were unsubstantiated. The US DOT and its partner the Wisconsin Department of Transportation (WisDOT) had simply provided the final outputs of its model in the environmental report–but had concealed much of the critical input data and assumptions. A federal judge ruled that the failure to disclose this information violated NEPA:

In the present case, the defendants [the state and federal transportation departments] have not explained how they applied their methodology to Highway 23 in a way that is sufficient for either the court or the plaintiff to understand how they arrived at their specific projections of traffic volumes through the year 2035. They do not identify the independent projections that resulted from either the TAFIS regression or the TDM model and do not identify whether they made any adjustments to those projections in an effort to reconcile them or to comply with other directives, such as the directive that projected growth generally cannot be less than 0.5% or the directive to ensure that all projections make sense intuitively. For this reason, the defendants have failed to comply with NEPA. This failure is not harmless. Rather, it has prevented the plaintiffs from being able to understand how the defendants arrived at traffic projections that seem at odds with current trends. Perhaps the defendants’ projections are accurate, but unless members of the public are able to understand how the projections were produced, such that they can either accept the projections or intelligently challenge them, NEPA cannot achieve its goals of informed decision making and informed public participation.

1000 Friends of Wisconsin v. U.S. DOT, Case No. 11-C-054, Decision & Order, May 22, 2015

Why this matters

The entire rationale for this project is based on the assumption that if nothing is done, traffic will get much worse at the Rose Quarter, and that if this project is built things will be much better. But the accuracy of these models is very much in question.

This is also important because the Environmental Assessment makes the provocative claim that this project, completely unlike any other urban freeway widening project in US history, will reduce both traffic and carbon emissions. The academic literature on both questions is firmly settled, and has come to the opposite conclusion. The regularity with which induced demand swamps new urban road capacity and leads to even more travel and pollution is so well documented that it is now called “The Fundamental Law of Road Congestion.” The claims that freeway widening projects can offset carbon emissions by reduced idling have also been disproven: Portland State researchers Alex Bigazzi and Miguel Figliozzi have published one of a series of papers indicating that the reverse is true: wider roads lead to more driving and more carbon pollution, not less. And in addition to not providing data, there’s nothing in the report to indicate that the authors have produced any independent, peer-reviewed literature to support their claims about freeway widening. The agencies simply point at the black box.

Statistical errors are common and can easily lead to wrong conclusions

Maybe, just maybe, the Oregon Department of Transportation has a valid and accurate set of data and models. But absent disclosure, there’s no way for any third party to know whether they’ve made errors or not. And even transportation experts working with transportation data can make consequential mistakes. Consider the recent report of the National Highway Traffic Safety Administration (another arm of the US Department of Transportation), which computed the crash rate for Tesla’s cars on autopilot. In a report released in January 2017, NHTSA claimed that Tesla’s autopilot feature reduced crashes 40 percent.

That didn’t sound right to a lot of transportation safety experts, including a firm called Quality Control Systems. They asked NHTSA for the data, but were unable to get it until the agency finally complied with a Freedom of Information Act (FOIA) request two years later. The result: NHTSA had made a fundamental math error in computing the number of vehicle miles traveled (which is actually an analog of average daily traffic). Far from showing that the autopilot feature is relatively safe, the corrected calculation showed that it is significantly more dangerous than the average of all human drivers.

What QCS found upon investigation, however, was a set of errors so egregious, they wrecked any predictive capability that could be drawn from the data set at all. In fact, the most narrow read of the most accurate data set would suggest that enabling Autosteer actually increased the rate of Tesla accidents by 59 percent.

A government agency hiding key data is putting the public behind the 8-ball

Without the opportunity to look at the data, there’s no way for anyone to check to see if ODOT has made a mistake in its calculations. A black box is no way to inform the public about a half-billion-dollar investment; you might say it puts us behind the 8-ball. It makes you wonder: What are they hiding?

Appendix: Expert Panel Critique of Rose Quarter Modeling

RQ Model Critique (PDF): https://cityobservatory.org/wp-content/uploads/2022/11/RQ-Model-Critique.pdf