Part 1: Resurrecting discredited data to paint a false history

The Texas Transportation Institute claims that traffic congestion is steadily getting worse. But its claims are based on resurrecting and repeating traffic congestion estimates from 1982 through 2009 that were based on a deeply flawed and biased model. Since 2009, TTI has used different data and a different estimation approach, which means it can’t make any accurate or reliable statements about whether today’s congestion is better or worse than a decade ago—or two or three decades ago.

Earlier, we went over some of the big problems with the Texas Transportation Institute’s (TTI) new “Urban Mobility Report.” Today, we want to focus on one claim in particular that’s been repeated by many media outlets: that traffic is worse now than before.

An Associated Press article, for example, highlights the Urban Mobility Report’s (UMR) claim that traffic is now worse than in 2007:

Overall, American motorists are stuck in traffic about 5 percent more than they were in 2007, the pre-recession peak, says the report from the Texas A&M Transportation Institute and INRIX Inc., which analyzes traffic data.

Four out of five cities have now surpassed their 2007 congestion.

And here’s the Wall Street Journal claiming that the UMR proves that traffic is worse now than at any time since at least 1982:

In a study set for release Wednesday, the university’s Texas Transportation Institute and Inrix, a data analysis firm, found traffic congestion was worse in 2014 than in any year since at least 1982.

The basis for this claim is that the level of congestion TTI measured for 2014 was higher than the level TTI now reports for any earlier year back to 1982.

The problem is that TTI has changed its methodology many times (fourteen, according to the authors’ own estimates). In 2009, it totally abandoned its two-decades-old approach and began using traffic speed data gathered by the traffic monitoring firm Inrix. As a result, the post-2009 data simply aren’t comparable to the pre-2009 data, which means it’s not possible to truthfully claim that traffic is worse (or better) than it was before the recession or in 1982.

Prior to 2010, TTI used an entirely different, and now discredited, methodology to estimate the travel time index. Before the advent of real-time speed monitoring, TTI could not directly measure the congestion it reported on. Instead, it built a mathematical model that predicted what the speed would be based on the volume of traffic on a road. The model assumes that roads automatically slow down as more traffic is added, an assumption that is not always correct. As total traffic volumes increased in the 1980s and 1990s, the TTI model mechanically converted higher volumes into lower peak-hour speeds, and so automatically generated a steadily increasing rate of congestion.
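To see why a model of this kind reports rising congestion whenever volume rises, whatever is actually happening on the road, here is a minimal sketch. The functional form and every number in it (free-flow speed, threshold, slope, volumes) are illustrative assumptions of ours, not TTI’s actual equations or data; the index is simply modeled travel time divided by free-flow travel time.

```python
# Illustrative only: a hypothetical volume-to-speed rule, NOT TTI's actual model.
# Every constant below is an assumption chosen to make the mechanism visible.
FREE_FLOW_MPH = 60.0   # assumed free-flow speed
THRESHOLD = 15_000     # assumed volume (vehicles/lane/day) below which no slowdown is modeled
SLOPE = 0.001          # assumed mph lost per extra vehicle per lane per day
MIN_SPEED_MPH = 20.0   # assumed floor so the modeled speed never reaches zero


def modeled_speed(volume_per_lane: float) -> float:
    """Speed is inferred from volume alone; no observed speed is ever consulted."""
    excess = max(0.0, volume_per_lane - THRESHOLD)
    return max(MIN_SPEED_MPH, FREE_FLOW_MPH - SLOPE * excess)


def travel_time_index(volume_per_lane: float) -> float:
    """Ratio of modeled travel time to free-flow travel time."""
    return FREE_FLOW_MPH / modeled_speed(volume_per_lane)


# Hypothetical volumes rising over time, as traffic did in the 1980s and 1990s.
volumes = [14_000, 18_000, 22_000, 26_000, 30_000]
indices = [travel_time_index(v) for v in volumes]

for v, idx in zip(volumes, indices):
    print(f"{v:>6} veh/lane/day -> modeled {modeled_speed(v):.1f} mph, index {idx:.2f}")
```

Because speed is a decreasing function of volume by construction, the index in this sketch can only go up as volume goes up. Nothing in it can register a road that carries more traffic at the same or higher speed.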

In 2010, we published “Measuring Urban Transportation Performance,” which showed that the TTI model lacked statistical validity. The modelers essentially ignored the underlying data and fitted their own relationship by eye. We also showed that the supposed speed declines generated by the model were inconsistent with real-world data from the Census and transportation surveys, which showed increased speeds. After 2009, the UMR’s authors simply dropped this methodology. But they didn’t stop reporting the flawed pre-2009 data.

For the technically inclined, here are the details of the critique of the 1982-2009 TTI methodologies. Many transportation experts have noted that TTI’s extrapolation of speeds from volume data was questionable. Dr. Rob Bertini—who was for several years Deputy Administrator of US DOT’s Research and Innovative Technology Administration—warned that the lack of actual speed data undercut the reliability of the TTI claims:

No actual traffic speeds or measures extracted from real transportation system users are included, and it should be apparent that any results from these very limited inputs should be used with extreme caution.

Bertini, R. (2005). Congestion and its Extent. In D. Levinson & K. Krizek (Eds.), Access to Destinations: Rethinking the Transportation Future of our Region: Elsevier.

The report’s authors conceded that they “eyeballed” the data to choose their volume-speed relationship:

…when trying to determine if detailed traffic data resembles the accepted speed-flow model, interpretations by the researcher were made based on visual inspection of the data instead of a mathematical model.

Schrank, D., & Lomax, T. (2006). Improving Freeway Speed Estimation Procedures (NCHRP 20-24(35)). College Station: Texas Transportation Institute. (emphasis added)

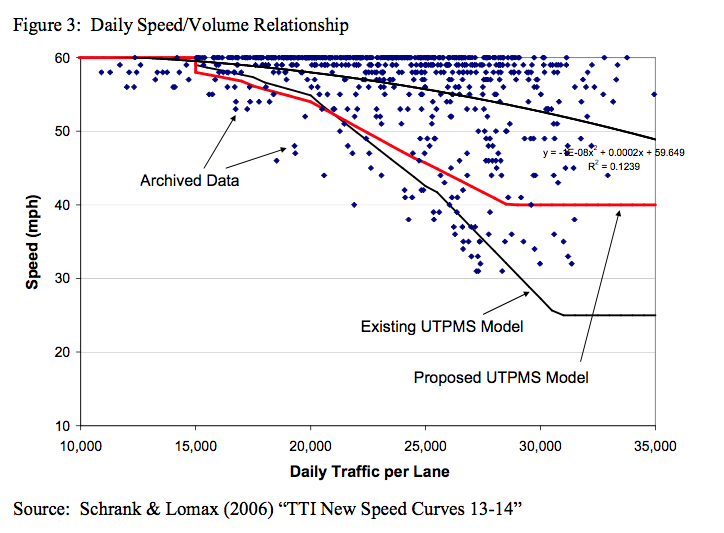

The data they used (blue diamonds) and the relationship that they guesstimated (the red line) are shown in the following chart:

The red line that the researchers drew by eyeballing the data not only has no mathematical basis, but also predicts speeds that are slower, 80 percent of the time, than those given by a simple quadratic curve (the downward-sloping black curve) fitted to the data. For example, the TTI model predicts that a road carrying 30,000 vehicles per lane per day will have an average speed of 40 miles per hour; the real-world data show that actual speeds on roads with this volume average more than 50 miles per hour. This biases upward the estimates of delay associated with additional travel volumes.
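The size of that bias is easy to work out. The sketch below converts the two speeds from the example above (40 mph modeled, 50 mph observed) into delay per mile. The 60 mph free-flow speed is our assumption for illustration, not a figure from the TTI report, and since observed speeds average “more than 50,” the 50 mph figure is a conservative stand-in.

```python
# Worked arithmetic for the 30,000 vehicles/lane/day example.
MODEL_MPH = 40.0       # speed predicted by the TTI model (from the text)
OBSERVED_MPH = 50.0    # observed speeds average "more than 50" (from the text)
FREE_FLOW_MPH = 60.0   # ASSUMPTION: illustrative free-flow speed, not from the report


def minutes_per_mile(speed_mph: float) -> float:
    """Time needed to cover one mile at the given speed."""
    return 60.0 / speed_mph


def delay_per_mile(speed_mph: float, free_flow_mph: float = FREE_FLOW_MPH) -> float:
    """Extra minutes per mile relative to free-flow conditions."""
    return minutes_per_mile(speed_mph) - minutes_per_mile(free_flow_mph)


model_delay = delay_per_mile(MODEL_MPH)        # 1.5 - 1.0 minutes per mile
observed_delay = delay_per_mile(OBSERVED_MPH)  # 1.2 - 1.0 minutes per mile
overstatement = model_delay / observed_delay

print(f"modeled delay:  {model_delay:.2f} min/mile")
print(f"observed delay: {observed_delay:.2f} min/mile")
print(f"model overstates delay by a factor of about {overstatement:.1f}")
```

Under that assumed free-flow speed, a 10 mph error in predicted speed turns into a modeled delay roughly two and a half times the delay implied by the observed speed. A different free-flow assumption changes the ratio but not the direction of the bias.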

The UMR authors can’t show that the congestion numbers that they estimated based on their flawed, pre-2009 model are in any way comparable to the post-2009 Inrix data. And in any event, the pre-2009 data are statistically unreliable. TTI’s figures can’t be squared with the fact that the average American is driving fewer miles today than at any time since the late 1990s, and that travel surveys show we’re also spending fewer hours traveling. The bottom line is that there’s simply no basis for the claim that congestion is worse today than at any time since 1982.

The fact that TTI makes this claim, and continues to publish its pre-2009 data even after the errors in its methodology have been documented, should lead policy makers, and journalists, to be extremely skeptical of its “Urban Mobility Report.” George Orwell famously wrote in his novel 1984 that “Who controls the past controls the future: who controls the present controls the past.” A fictitious and incorrect history of transportation system performance will be a poor guide to future transportation policy.

In theory, TTI claims to have overcome these problems by switching to actual traffic speed data gathered by Inrix. Tomorrow, in Part 2 of this post, we’ll examine TTI’s analysis of the Inrix data to see what the real trend in traffic congestion has actually been in the era of big data.

Note: This post has been revised and expanded from a version published earlier on September 1.