At City Observatory, we’ve long been critical of some seemingly scientific studies and ideas that shape our thinking about the nature of our transportation system, and its performance and operation. We’ve pointed out the limitations of the flawed and outdated “rules of thumb” that guide our thinking about trip generation, parking demand, road widths and other basics. One of the most pernicious and persistent data fables in the world of transportation, however, revolves around the statistics that are presented to describe the size, seriousness and growth of traffic congestion as a national problem. This week saw the latest installment in a perennial series of alarming but actually uninformative reports about traffic congestion and its economic impacts.

The same old story

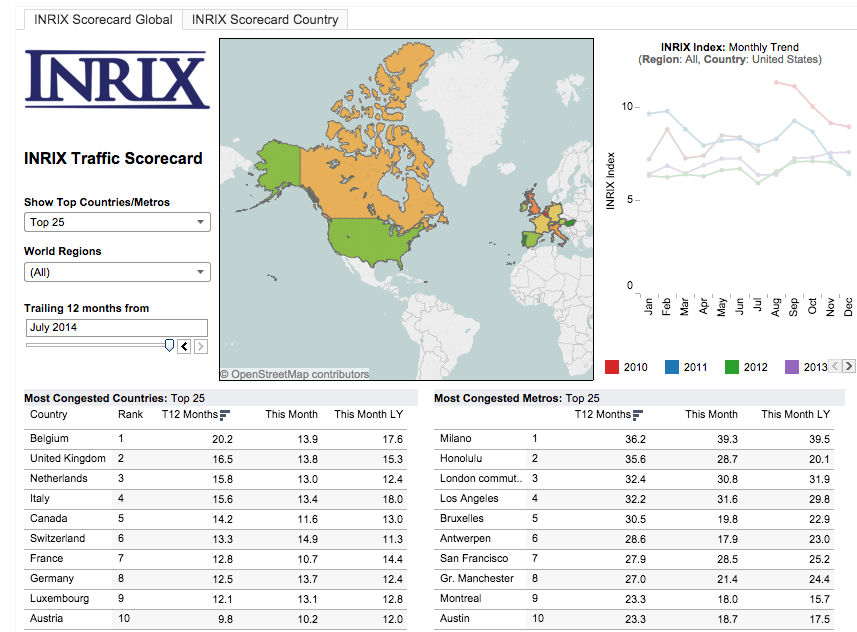

On March 15, traffic data firm Inrix released its 2015 Traffic Scorecard, ranking travel delays in the largest cities in Europe and North America. As is customary for the genre, it was trumpeted with a press release bemoaning the billions of hours that we waste in traffic. That, in turn, generated the predictable slew of doom-saying headlines:

- Houstonians wasted even more time in traffic in 2015, report shows

- Report: San Francisco has the third worst traffic in the country.

- U.S. Big Cities Now Among the Most traffic jammed in the world

But at least a few journalists are catching on. At the Los Angeles Times, reporter Laura Nelson spoke with Herbie Huff from the UCLA transportation center, who pointed out that “Aside from an economic downturn, the only way traffic will get better is if policymakers charge drivers to use the roads.”

And GeekWire headlined its story “Study claims Seattleites spend 66 hours per year in traffic, but some say that number’s deceptive” and reported Greater Greater Washington’s David Alpert as challenging the travel time index methodology used in the Inrix report.

Headlines aside, a close look at the content shows that, on many levels, this year’s scorecard is an extraordinary disappointment.

As we’re constantly being told by Inrix and others, we’re on the verge of an era of “smart cities,” where big data will give us tremendous new insights into the nature of our urban problems and help us figure out better, more cost-effective solutions. And very much to their credit, Inrix and its competitors have made a wealth of real time navigation and wayfinding information available to anyone with a smart phone—which is now a majority of the population in rich countries. Driving is much eased by knowing where congestion is, being able to route around it (when that’s possible) and generally being able to calm down by simply knowing about how long a particular journey will take because of the traffic you are facing right now. It’s quite reassuring to hear Google Maps tell you “You are on the fastest route, you will arrive at your destination in 18 minutes.” This aspect of big data is working well.

By aggregating the billions of speed observations that they’re tracking every day, Inrix is in a position to tell us a lot about how well our highway system is working. That, in theory, is what the Scorecard is supposed to do. But in practice, it’s falling far short.

As impressive as the Inrix technology and data are, they’re only useful if they provide a clear and consistent basis for comparison. Are things measured in the same way in each city? Is one year’s data comparable with another? We and others have pointed out that the travel time index that serves as the core of the Inrix estimates is inherently biased against compact metropolitan areas with shorter travel distances, and creates the mistaken impression that travel burdens are less in sprawling, car-dependent metros with long commutes.
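To see why a ratio-based measure can mislead, consider a minimal sketch. It assumes the travel time index is the ratio of peak-period travel time to free-flow travel time. The two metros and every figure attached to them are hypothetical, chosen only to illustrate the arithmetic, and are not drawn from Inrix data.

```python
from dataclasses import dataclass


@dataclass
class Metro:
    """A hypothetical metro area; all values are illustrative assumptions."""
    name: str
    commute_miles: float   # average one-way commute distance
    free_flow_mph: float   # uncongested travel speed
    peak_mph: float        # peak-period travel speed

    def free_flow_minutes(self) -> float:
        return self.commute_miles / self.free_flow_mph * 60

    def peak_minutes(self) -> float:
        return self.commute_miles / self.peak_mph * 60

    def travel_time_index(self) -> float:
        # Ratio of peak travel time to free-flow travel time.
        # Note that commute distance cancels out of this ratio entirely.
        return self.peak_minutes() / self.free_flow_minutes()


compact = Metro("Compact City", commute_miles=8, free_flow_mph=40, peak_mph=28)
sprawling = Metro("Sprawl City", commute_miles=20, free_flow_mph=50, peak_mph=42)

for m in (compact, sprawling):
    print(f"{m.name}: index {m.travel_time_index():.2f}, "
          f"peak commute {m.peak_minutes():.1f} min")
# Compact City: index 1.43, peak commute 17.1 min
# Sprawl City: index 1.19, peak commute 28.6 min
```

In this made-up comparison the compact metro scores worse on the index, yet its residents spend far less time on the road at rush hour. Because distance drops out of the ratio, the index says nothing about how long people actually travel.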

The end of history

For several years, it appeared that the Inrix work offered tremendous promise. They reported monthly data, on a comparable basis, using a nifty Tableau-based front end that let users track data for particular markets over time. You could see whether traffic was increasing or decreasing, and how your market stacked up against other cities. All this has simply been disappeared from the Inrix website—though you can still find it, with data through the middle of 2014, on an archived Tableau Webpage.

This year’s report is simply a snapshot of 2015 data. There’s nothing from 2014, or earlier. It chiefly covers the top ten cities, and provides a drill-down format that identifies the worst bottlenecks in cities around the nation. It provides no prior-year data that would let observers tell whether traffic levels are better or worse than the year before. In addition, the description of the methodology is sufficiently vague that it’s impossible to tell whether this year’s estimates are in fact comparable to the ones that Inrix published last year.

Others in the field of using big data do a much better job of being objective and transparent in presenting their data. Take for example real estate analytics firm Zillow (like Inrix, a Seattle-based IT firm, started by former Microsoft employees). Zillow researchers make available and regularly update a monthly archive of their price estimates for different housing types for different geographies, including cities, counties, neighborhoods and zip codes. An independent researcher can easily download and analyze this data to see what Zillow’s data and modeling show about trends among and within metropolitan areas. Zillow still retains its individual, parcel-level data and proprietary estimating models, but contributes to broader understanding by making these estimates readily available. Consistent with its practice through at least the middle of 2014, Inrix ought to do the same—if it’s really serious about leveraging its big data to help tackle the congestion problem.

A Texas divorce?

For the past couple of years, Inrix has partnered closely with the Texas Transportation Institute (TTI), the researchers who for more than three decades have produced a nearly annual Urban Mobility Report (UMR). Year in and year out, the UMR has had the same refrain: traffic is bad, and it’s getting worse. And the implication: you ought to be spending a lot more money widening roads. Partly in response to critiques about the inaccuracy of the data and methodology used in earlier UMR studies, in 2010, the Texas Transportation Institute announced that henceforth it would be using the Inrix data to calculate traffic delay costs.

But this year’s report has been prepared solely by the team at Inrix, and has no mention of the Texas Transportation Institute or the Urban Mobility Report in its findings or methodology. Readers of the last Inrix/TTI publication, released jointly by the two institutions last August, are left simply to wonder whether the two are still working together or have gone their separate ways. It’s also impossible to tell if the delay estimates contained in this year’s Inrix report are comparable to those in last year’s Inrix/TTI report. (If the two are comparable, then the report is implying that traffic congestion dropped significantly in Washington DC, from the 81 hours reported by TTI/Inrix last August to the 75 hours reported by Inrix in this report.)

Have a cup of coffee, and call me in the morning

As we pointed out last April, the insights afforded by this kind of inflated and unrealistic analysis of costs, unmoored from any serious thought about the costs of expanding capacity sufficiently to reduce the hours spent in traffic, are really of no value in informing planning efforts or public policy decisions. We showed how, using the same assumptions and similar data about delays, one could compute a cappuccino congestion index that showed Americans waste billions of dollars’ worth of their time each year standing in line at coffee shops.
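The arithmetic behind this kind of headline cost figure is simple, which is part of the problem. What follows is a minimal sketch of the general approach (delay beyond an idealized no-wait baseline, multiplied by a value of time). The function name and every input are hypothetical placeholders, not figures from our earlier analysis or from Inrix.

```python
def annual_delay_cost(visits_per_year: float,
                      avg_wait_minutes: float,
                      baseline_wait_minutes: float,
                      value_of_time_per_hour: float) -> float:
    """Dollar value of 'wasted' time: any wait beyond the idealized
    baseline is counted as pure loss, with no offsetting cost of
    providing enough capacity to eliminate it."""
    delay_minutes = max(avg_wait_minutes - baseline_wait_minutes, 0.0)
    delay_hours = visits_per_year * delay_minutes / 60
    return delay_hours * value_of_time_per_hour


# Hypothetical inputs: 2 billion coffee shop visits a year, a 5-minute
# average wait, a 1-minute "free-flow" wait, and time valued at $15/hour.
cost = annual_delay_cost(2e9, 5, 1, 15)
print(f"${cost / 1e9:.1f} billion")  # $2.0 billion
```

Nothing in the calculation asks what it would cost to staff every coffee shop so that nobody ever waits. The congestion cost estimates leave out the same question for roads.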

Inrix data have great potential, but a mixed record, when it comes to actually informing policy decisions. On the one hand, Inrix data were helpful in tracking speeds on the Los Angeles freeway system, and in showing that after the region had spent $1.1 billion to widen a stretch of I-405, overall traffic speeds were no higher: seeming proof of the notion that induced demand tends to quickly erase the time-saving benefits of added capacity. On the other hand, in Seattle, Inrix’s claim that high occupancy toll lanes hadn’t improved freeway performance was skewered by a University of Washington report that pointed out that the Inrix technology couldn’t distinguish between speeds on HOT lanes and regular lanes, and noted that Inrix had cherry-picked only the worst performing segments of the roadway, ignoring the road segments that saw speed gains with the HOT lane project.

This experience should serve as a reminder that data by itself (even, or maybe especially, really big data) doesn’t easily or automatically answer questions. It’s important that data be transparent and widely accessible, so that when it is used to tackle a policy problem, everyone can see and understand its strengths and limitations. The kind of highly digested data presented in this report card falls well short of that mark.

Our report card on Inrix

Here’s the note that we would write to Inrix’s parents to explain the “D” we’ve assigned to Inrix’s Report Card.

Inrix is a bright, promising student. He shows tremendous aptitude for the subject, but isn’t applying himself. He needs to show his work, being careful and thorough, rather than excitedly jumping to conclusions. Right now he’s a little bit more interested in showing off and drawing attention to his cleverness than in working out the correct answer to complicated problems. We’re confident that when he shows a little more self-discipline, scholarship and objectivity—and learns to play well with others—he’ll be able to be a big success.